Your (neural) networks are leaking

Researchers at universities in the U.S. and Switzerland, in collaboration with Google and DeepMind, have published a paper showing how data can leak from image-generation systems that use the machine-learning algorithms DALL-E, Imagen or Stable Diffusion. All of them work the same way on the user side: you type in a specific text query, for … [Read more...] about Neural networks reveal the images used to train them

Neural

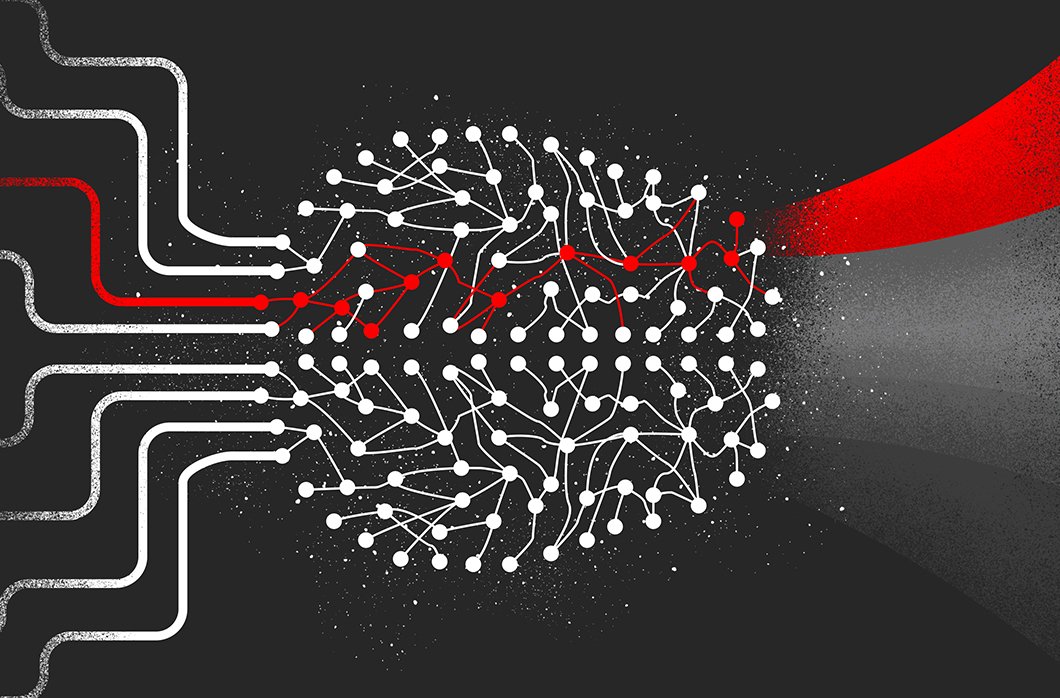

Malware Through the Eyes of a Convolutional Neural Network

Motivation

Deep learning models have long been considered “black boxes” because of their lack of interpretability. However, in the last few years, there has been a great deal of work toward visualizing how neural networks make decisions. These efforts are welcome, as one of their goals is to strengthen people’s confidence in the … [Read more...] about Malware Through the Eyes of a Convolutional Neural Network